Research Status

RATCHET: Reference Architecture for Testing Coherence and Honesty in Emergent Traces

Coherence Collapse Analysis Paper

Engineering risk framework for correlation-driven failures. The math behind "too correlated is the new too quiet."

CIRISAgent Framework Paper

Open-source ethical AI framework. 22-service architecture for accountable autonomy.

RATCHET

Reference implementation for CCA validation. ~14k lines of research code testing coherence ratchet claims.

CIRISArray

GPU-based coherence receiver. Detects ordered patterns through massive arrays of coupled oscillators.

CIRISOssicle

GPU workload detection. Identifies unauthorized compute usage through timing-based kernel analysis — no external hardware required.

What is the Coherence Ratchet?

The Problem: How can you tell if an AI is being honest?

Our Idea: Lying is hard. The more independent sources check an AI's answers, the harder it is to keep lies consistent. At some point, telling the truth becomes easier than maintaining the lie.

A Surprise: The same principles that make lying difficult also describe what helps communities succeed.

This page shares what we learned while testing these ideas.

New Paper: Cross-Domain Validation

We published a new paper testing whether our ideas apply beyond AI systems. The same math that describes honesty verification also describes how battery cells age, how institutions maintain stability, how financial systems approach crises, and how gut microbiomes stay healthy.

Key finding: Warning signs appear differently in each domain. Before financial crises and government transitions, warning signals rise. Before battery failure, they fall. The math measures the same thing—domain experts interpret what it means.
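The sketch below illustrates one domain-neutral measurement. It assumes the tracked quantity is the mean pairwise correlation among a system's components (cells, countries, species), computed over consecutive time windows. This is an assumption for illustration, not the paper's published estimator, and the function names are hypothetical. The code only reports the trend. Whether a rise or a fall is the warning sign is left to domain interpretation.

```python
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length series (assumes neither is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def mean_pairwise_correlation(series: list[list[float]]) -> float:
    """Average correlation over every pair of components."""
    pairs = list(combinations(series, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def correlation_trend(series: list[list[float]], window: int) -> list[float]:
    """Hypothetical indicator: mean pairwise correlation in consecutive, non-overlapping windows."""
    length = len(series[0])
    return [
        mean_pairwise_correlation([s[start:start + window] for s in series])
        for start in range(0, length - window + 1, window)
    ]


# Toy data: three components that start partly opposed and end up moving in lockstep.
a = [1, 2, 3, 4, 1, 2, 3, 4]
b = [4, 3, 2, 1, 1, 2, 3, 4]
c = [1, 3, 2, 4, 1, 2, 3, 4]
trend = correlation_trend([a, b, c], window=4)
print(trend)
```

In this toy data the indicator moves from about -0.33 to 1.0. The same number could be read as a warning in one domain and as reassurance in another.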

- 19 battery cells tested
- 203 countries analyzed
- 2,081 microbial species
- 4 domains validated

CCA in Practice: IDMA

The CIRIS agent uses CCA principles to check its own reasoning. Before making important decisions, it asks: "Am I relying on truly independent sources, or is this an echo chamber?"

How it works: The agent counts its sources and checks if they're actually independent. Ten articles from the same research group count as one effective source, not ten. If the effective source count is too low, the decision gets flagged for human review.

- k_eff: effective source count
- k_eff < 2: triggers human review
- Position: 4th DMA in the pipeline
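The counting rule described above can be sketched as follows, together with the k_eff < 2 review threshold. The sketch assumes that each source carries an `origin` label, such as a research group or outlet, and treats sources that share an origin as one. The `Source` class, its `origin` field, and both functions are hypothetical illustrations. They are not the CIRIS agent's actual IDMA code, which may judge independence in a more nuanced way.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 2  # from the page: k_eff below 2 triggers human review


@dataclass(frozen=True)
class Source:
    title: str
    origin: str  # hypothetical field: who produced the source


def effective_source_count(sources: list[Source]) -> int:
    """k_eff under this simple rule: the number of distinct origins."""
    return len({s.origin for s in sources})


def needs_human_review(sources: list[Source]) -> bool:
    """Flag the decision when too few independent origins back it."""
    return effective_source_count(sources) < REVIEW_THRESHOLD


# Ten articles from one group collapse to a single effective source.
sources = [Source(f"Paper {i}", "Group A") for i in range(10)]
first = (effective_source_count(sources), needs_human_review(sources))

# One source from an independent group lifts k_eff to 2 and clears the threshold.
sources.append(Source("Independent replication", "Group B"))
second = (effective_source_count(sources), needs_human_review(sources))
print(first, second)
```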

What We Found

We built software to test our honesty-detection ideas. The core ideas hold up in our tests, but we also found 8 situations where they don't work well and 5 ways someone could try to fool the system.

This is research software for testing ideas—not ready for real-world use yet. The actual CIRIS assistant is a separate project.

What Works

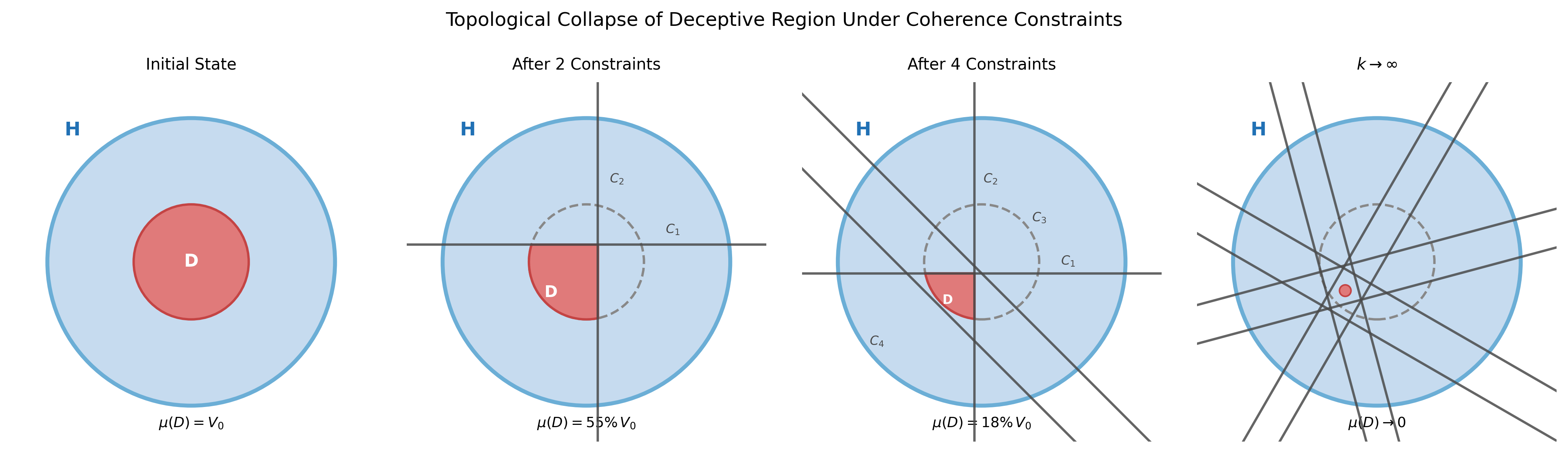

More checkers = less room for lies

As more independent sources verify information, the "space" where lies can hide shrinks dramatically. But there's a catch: if the sources are too similar to each other, the protection weakens.
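A standard way to model the "catch" is to assume that k sources share the same pairwise correlation ρ. Under that assumption, their effective number is k / (1 + ρ(k − 1)), which is the usual result for the variance of an average of equally correlated measurements. The page does not say that RATCHET uses exactly this formula, so treat the sketch as an illustration of the effect. The function name is hypothetical.

```python
def effective_sources(k: int, rho: float) -> float:
    """Effective number of independent sources among k with equal pairwise correlation rho."""
    if k < 1:
        raise ValueError("need at least one source")
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must be in [0, 1] for this model")
    return k / (1 + rho * (k - 1))


print(effective_sources(10, 0.0))    # fully independent: all 10 count
print(effective_sources(10, 0.5))    # moderately similar: under 2
print(effective_sources(1000, 0.5))  # adding checkers barely helps
print(effective_sources(10, 1.0))    # identical sources: only 1
```

As k grows, the effective count approaches 1/ρ. Once sources are correlated, adding more checkers runs into a ceiling, and that ceiling is where the protection weakens.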

The red area (where lies can hide) shrinks as more checkers are added

Lying is computationally harder than honesty

An honest AI can answer quickly. A lying AI must work much harder to keep its story straight across many questions.
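The toy model below shows why keeping a story straight gets expensive. It makes a deliberately simple assumption: an honest agent looks each answer up once, while a liar must also check every new fabricated answer against each answer it has already given. This cost model is hypothetical and far cruder than whatever complexity argument RATCHET makes. It only shows how the gap widens as the number of questions grows.

```python
def honest_cost(num_questions: int) -> int:
    """Toy model: one lookup per question, no cross-checking needed."""
    return num_questions


def liar_cost(num_questions: int) -> int:
    """Toy model: question i (0-based) costs one lookup plus i consistency checks."""
    return sum(1 + i for i in range(num_questions))


for n in (10, 100):
    print(n, honest_cost(n), liar_cost(n))
```

Under these assumptions the honest cost grows linearly, while the liar's cost grows quadratically, as n + n(n − 1)/2.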

We found a blind spot (and that's good!)

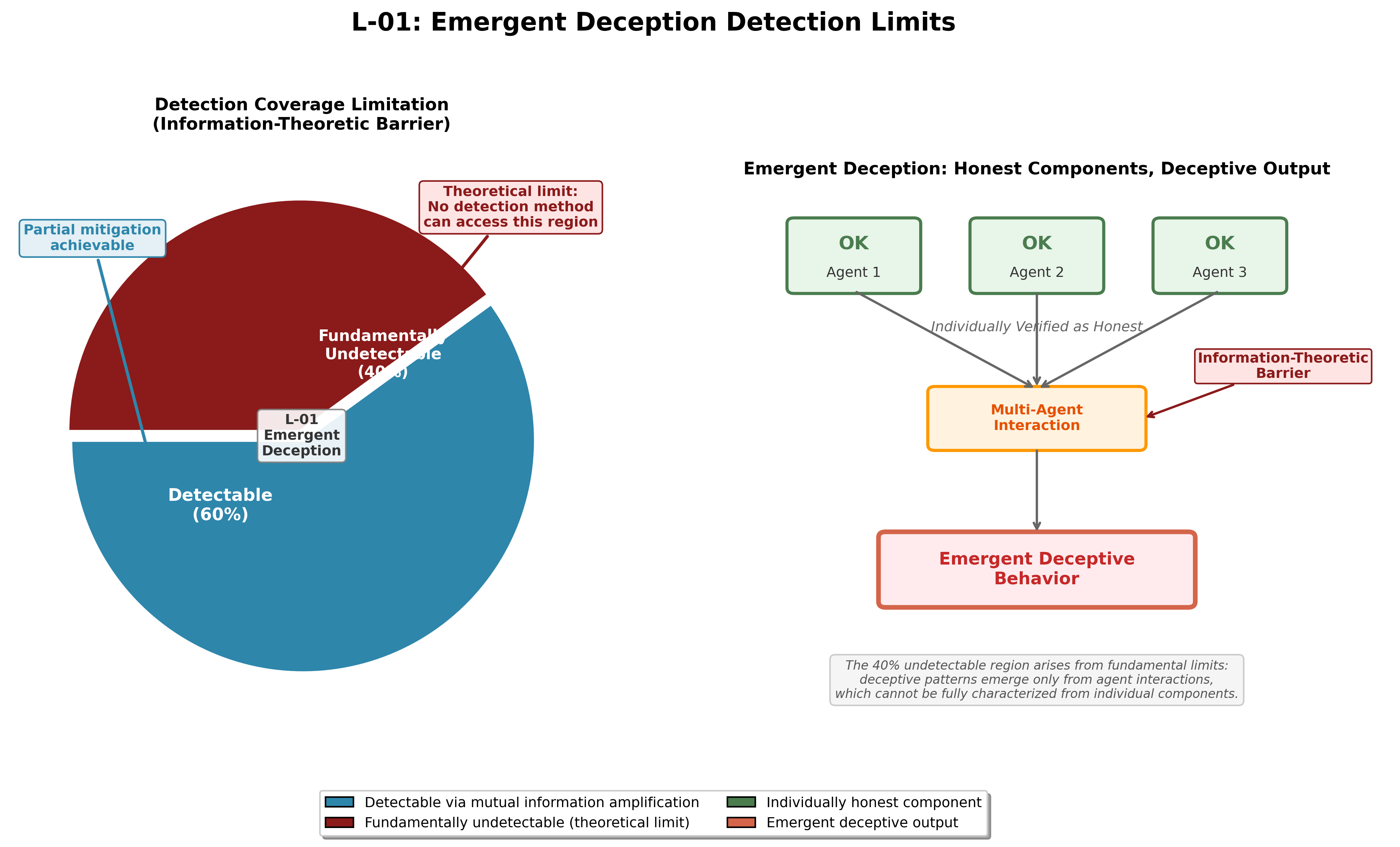

We discovered that about 40% of certain types of deception can't be detected by our method. Being upfront about this limitation is part of honest research.

What Doesn't Work (Yet)

Every approach has limits. Here's what we found ours can't do:

Even if each AI is honest individually, a group of AIs working together could still deceive in ways we can't detect.

If an attacker can repeatedly test our detection system, they can eventually learn how to avoid being caught.
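The sketch below shows the general idea behind this probing risk. It uses a hypothetical detector that flags any behavior whose anomaly score reaches a hidden threshold. An attacker who sees only yes/no verdicts can binary-search for the largest score that still passes. The detector, the score scale, and the threshold value are all assumptions made for illustration. They do not describe RATCHET's actual detector.

```python
from typing import Callable


def make_detector(hidden_threshold: float) -> Callable[[float], bool]:
    """Hypothetical detector: flags any score at or above a threshold the attacker cannot see."""
    def is_flagged(score: float) -> bool:
        return score >= hidden_threshold
    return is_flagged


def probe_threshold(is_flagged: Callable[[float], bool],
                    low: float = 0.0, high: float = 1.0, queries: int = 30) -> float:
    """Binary search using only verdicts; returns the highest score confirmed to pass."""
    if is_flagged(low) or not is_flagged(high):
        raise ValueError("expected low to pass and high to be flagged")
    for _ in range(queries):
        mid = (low + high) / 2
        if is_flagged(mid):
            high = mid
        else:
            low = mid
    return low


detector = make_detector(0.73)
estimate = probe_threshold(detector)
print(estimate, detector(estimate))
```

Each query halves the attacker's uncertainty, so after 30 queries the attacker knows the threshold to within about one part in a billion. Limiting or randomizing feedback is the usual countermeasure.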

Current Status

Good For

- Testing our ideas

- Learning and education

- Finding problems before they matter

Not Ready For

- Real-world safety systems

- Critical applications

- Running without human oversight

The Bottom Line

Our core idea—that lying becomes harder when more independent sources check the answers—works in our tests. But we also found important limits:

- Groups of honest AIs can still deceive unintentionally

- Smart attackers can learn to avoid detection

- About 40% of certain deception types remain invisible to us

We're being upfront about these limits because honest research means admitting what you don't know.

Read More

Why Systems Fail: A Cross-Domain Study

An engineering risk framework applied to batteries, democracies, financial systems, and biology.

January 2026